A client I hadn’t heard from in several years called. They had a new flagship product line with some major problems. Customers were furious, all the original programmers had left, and something needed to be done fast. So they asked if I could come out for six months or so to straighten things out.

The products were expensive laboratory-grade instrument modules (costing tens of thousands each) that were designed to be stacked and to work together to gather and consolidate data. But there were problems with the communications between the modules and the host PC. Customers would spend hours preparing for a test, and a few more hours running it. But partway through the test, the system would suddenly hang, data would be lost, and the test would need to be rerun from scratch. And there were all kinds of other mysterious symptoms that were hard to reproduce and even harder to explain.

The individual instruments were extremely reliable, and had been for years. The problem was with the added inter-module communications, both the hardware and the software. The instruments were designed to talk to a single host, not a network. So the company designed add-on boards to allow for a mini-network of instruments using CAN bus communications hardware and a proprietary communications protocol. The new boards also provided a USB connection for a host PC to monitor and download the test data.

There were many problems, but here are the main ones:

Poor protocol design

When communications problems exist, I look for two things — the documentation describing the protocol and some tools to capture and analyze the communications bus traffic to see what is really going on. They had neither.

A protocol is a specification of the format of the data and messages that travel between devices. A good protocol design minimizes the chance of errors and detects them when they occur, typically through some form of checksum or error-correcting code. It also anticipates the different errors that might occur and describes how to handle them so normal communications can resume. Writing a protocol document lets all the scenarios be thought out ahead of time, and gives peer review and corrections a chance to polish the plan into a bulletproof scheme.
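As a rough illustration of the error-detection piece, here is a minimal Python sketch that appends a CRC-32 to each message and rejects anything that arrives damaged. The function names and the choice of CRC-32 are my own assumptions for the example; they are not taken from the client's protocol.

```python
import struct
import zlib


def add_checksum(payload: bytes) -> bytes:
    """Return the payload followed by its CRC-32 as 4 big-endian bytes."""
    return payload + struct.pack(">I", zlib.crc32(payload))


def verify_checksum(message: bytes) -> bytes:
    """Return the payload if the trailing CRC-32 matches, else raise ValueError."""
    if len(message) < 4:
        raise ValueError("message too short to contain a checksum")
    payload = message[:-4]
    (received_crc,) = struct.unpack(">I", message[-4:])
    if zlib.crc32(payload) != received_crc:
        raise ValueError("checksum mismatch: message was corrupted in transit")
    return payload
```

A receiver that calls `verify_checksum` on every message can discard a damaged one and ask for a resend instead of acting on bad data. What to do after a mismatch (retry, report, resynchronize) is exactly the kind of decision a protocol document should spell out.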

They didn’t have a protocol specification document, and they had never gone through the design process. Instead, the ad hoc protocol evolved over time, with more and more patched onto it as new issues arose and little to no documentation. If anyone had ever seen the big picture of what was being created, they would have realized that it was a mess and a minefield of problems waiting to happen.

Since all of the original programmers were gone, we spent months trying to understand what it was supposed to do, while also trying to figure out why it kept failing and how to fix it.

In an ideal situation, you would have a well thought out and documented protocol specification and tools to sniff the communications and capture snapshots of any faults. Within days you would find the problem and solve it. Instead, the days or weeks they saved by skipping these steps meant months of time spent on the back end trying to clean up the mess. Even worse, there was some serious damage to the company’s reputation and sales.

Lack of safeguards

The system included thousands of individual settings for calibration and configuration. But these settings were unprotected and could be wiped out or changed accidentally in an instant by an errant command, all without any warning or indication that it had happened.

The protocol had no provision to identify what type of data packet was being sent, such as a packet wrapper describing the contents. The receiver was supposed to know when the sender was changing states from, for example, a command mode to a file transfer. But if the mode switch was lost or delayed, the receiver could see the file and try to interpret its bytes as commands, potentially wreaking havoc as it went nuts doing all kinds of random things it wasn’t supposed to do, such as overwriting critical settings.
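To make the idea of a packet wrapper concrete, here is a small Python sketch in which every packet starts with a type byte and a length. The `PacketType` values and the 3-byte header layout are hypothetical choices for illustration, not the format the product used.

```python
import struct
from enum import IntEnum
from typing import Tuple


class PacketType(IntEnum):
    """Hypothetical packet types; a real protocol would define its own."""
    COMMAND = 1
    FILE_DATA = 2


# Header: 1-byte packet type followed by a 2-byte big-endian payload length.
HEADER = struct.Struct(">BH")


def wrap(ptype: PacketType, payload: bytes) -> bytes:
    """Prefix the payload with a header that says what it is and how long."""
    return HEADER.pack(ptype, len(payload)) + payload


def unwrap(packet: bytes) -> Tuple[PacketType, bytes]:
    """Validate the header and return (type, payload), or raise ValueError."""
    if len(packet) < HEADER.size:
        raise ValueError("truncated header")
    raw_type, length = HEADER.unpack_from(packet)
    payload = packet[HEADER.size:]
    if len(payload) != length:
        raise ValueError("payload length does not match header")
    return PacketType(raw_type), payload  # unknown type raises ValueError
```

With a wrapper like this, the receiver decides what to do from the packet itself rather than from a mode it hopes it is still in. File bytes that happen to look like a command still arrive tagged as `FILE_DATA`, so they never reach the command interpreter.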

Bottlenecks

There was a big problem with getting the data out of the system. After a four hour test, it could take an hour or two just to transfer the data file to a PC for analysis. There were two big bottlenecks:

First, the CAN bus connection between the modules was being run at 125,000 bits per second. That might sound like a lot, but standard USB speeds are nearly 4000 times faster. So a file that takes only a second to transfer over USB would take over an hour on the CAN bus! (Actually, it is even worse than that since the CAN bus has a very high overhead, using 5 to 8 bytes of wrapper around each tiny 8-byte packet. So the effective data rate is close to half of the bit rate.)
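The arithmetic behind those figures is easy to check. The short Python sketch below uses only the numbers quoted above and compares raw signaling rates, so it ignores USB's own protocol overhead.

```python
USB_BPS = 480_000_000  # USB 2.0 high-speed signaling rate, bits per second
CAN_BPS = 125_000      # CAN bus bit rate used between the modules


def payload_fraction(payload_bytes: int, overhead_bytes: int) -> float:
    """Fraction of the bytes on the wire that carry actual data."""
    return payload_bytes / (payload_bytes + overhead_bytes)


speed_ratio = USB_BPS / CAN_BPS          # how many times faster USB is
minutes_on_can = speed_ratio * 1.0 / 60  # a 1-second USB transfer, in minutes

# 8-byte payload with 5 bytes (best case) or 8 bytes (worst case) of wrapper
best_case_bps = CAN_BPS * payload_fraction(8, 5)
worst_case_bps = CAN_BPS * payload_fraction(8, 8)

print(f"USB is {speed_ratio:.0f}x faster than the CAN bus")
print(f"1 second on USB is about {minutes_on_can:.0f} minutes on CAN")
print(f"Effective CAN data rate: {worst_case_bps:.0f} to {best_case_bps:.0f} bits per second")
```

That works out to a ratio of 3,840, or 64 minutes on the CAN bus for every second on USB, and an effective data rate of 62,500 to roughly 76,900 bits per second once the wrapper is counted.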

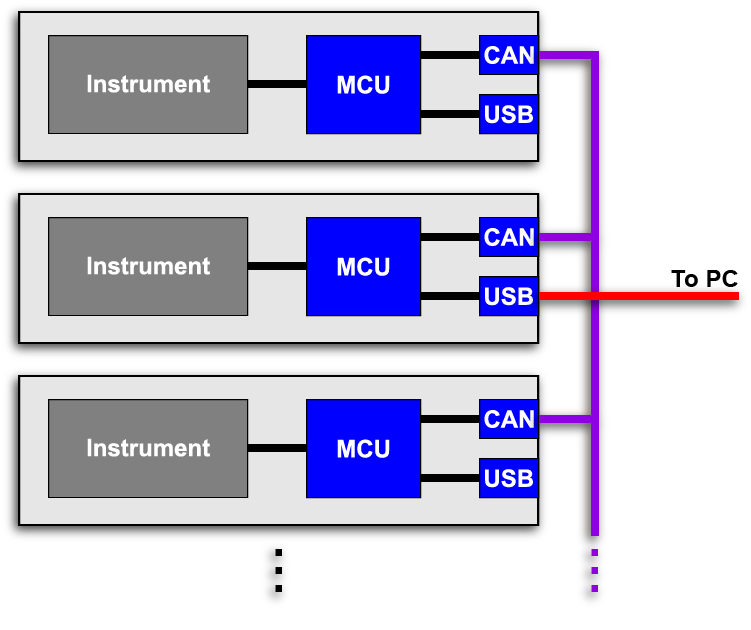

Red = USB (480,000 kbps)

Purple = CAN bus (125 kbps)

Black = plain asynchronous serial (115 or 230 kbps)

The diagram above shows the communications flow between the different components. Each module consists of a laboratory instrument and an interface board which includes an MCU and a CAN and USB interface. The different modules in a stack talk to each other through the CAN bus backbone. And a host PC could monitor or download the data through a connection to one of the modules’ USB ports.

Since you could never know which module the PC might be plugged into, the programmers decided to make each module keep a copy of every other module’s data. This would avoid the bottleneck of using the CAN bus to do large file transfers, since every MCU could send it all directly to the USB port. But it didn’t work, and everyone was scratching their heads wondering why the PC transfers were so slow. One other bottleneck had been overlooked.

The product specs could say that they had a USB 2.0 standard port, capable of 480 million bits per second rates. But do you see the black line that transfers data from the MCU to the USB adapter? That is a standard serial port line limited to only 230 thousand bits per second, about 2000 times slower than the USB port. It’s like trying to supply a firehose with a narrow IV tube. The flow will never be more than a dribble.
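The rule at work here is that a data path is only as fast as its slowest link. Here is a minimal Python sketch of that rule using the rates from the diagram legend. The 100-million-byte file size is a hypothetical figure picked for illustration, since the real file sizes are not given, and the time estimate ignores framing and protocol overhead.

```python
def find_bottleneck(link_rates_bps: dict) -> tuple:
    """Return (name, rate) of the slowest link; it caps the whole path."""
    name = min(link_rates_bps, key=link_rates_bps.get)
    return name, link_rates_bps[name]


def transfer_seconds(file_bytes: int, rate_bps: float) -> float:
    """Ideal transfer time, ignoring framing and protocol overhead."""
    return file_bytes * 8 / rate_bps


# Rates taken from the diagram legend, in bits per second.
old_pc_path = {
    "MCU to USB adapter (async serial)": 230_000,
    "USB adapter to PC (USB 2.0)": 480_000_000,
}

slowest_link, path_bps = find_bottleneck(old_pc_path)

# HYPOTHETICAL file size for illustration only.
EXAMPLE_FILE_BYTES = 100_000_000
minutes = transfer_seconds(EXAMPLE_FILE_BYTES, path_bps) / 60

print(f"Bottleneck: {slowest_link} at {path_bps} bits per second")
print(f"A {EXAMPLE_FILE_BYTES}-byte file needs at least {minutes:.0f} minutes")
```

Running the same function over the planned data path during design review would have flagged the serial line long before a customer did.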

File transfers took so long that customers would instead attach a dedicated PC throughout the test to download the data as it happened. Or they would take the modules apart to get to the CompactFlash memory cards that were hidden inside.

Eliminating the communications problems

One thing that became clear over time was that the problems were even worse than we thought. It seemed like a bottomless pit of new issues to discover and fix. New product plans were in motion, so it was time to step back and consider changing the architecture rather than perpetuating such a troublesome design. My proposal was accepted, so I stayed on for another year to implement the new system.

Fast and powerful at a low cost

When I first started designing electronics years ago, I noticed something interesting. Some chips with far more features and capabilities could be far less expensive than less capable alternatives. The reason was volume. If a chip was used in a high-volume product like phones or personal computers, the volume would drive the price way down, and I could take advantage of that even in a low-volume product.

The smartphone market has made some incredibly powerful, tiny and highly integrated chips available at extremely low prices. System-on-a-chip devices with a powerful CPU, DSP, video, audio, Ethernet, USB and more are available in a single chip costing just a few dollars. Add some memory, connectors and an operating system and you have a powerful computer. That has led to the creation of powerful single board computers covering just a few square inches, perfect for dropping into an embedded system.

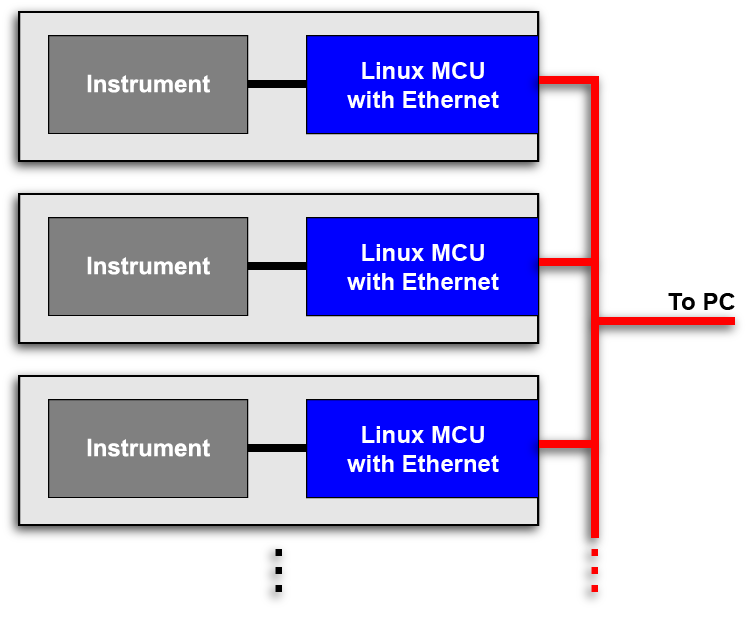

For the next generation of this troubled product, we decided to replace their proprietary hardware and communications with an off-the-shelf single board computer that cost a fraction of their old design, yet had far more capabilities. The included standard Ethernet was capable of 100 million bits per second, a 400- to 800-fold increase in speed over the throttled USB ports and the CAN bus on the old board. And the PC would connect to the Ethernet bus, not a specific module, so all modules could be reached equally fast. The revised communications bus structure is shown below:

Linux Operating System

The boards also ran Linux, the open source (and free) operating system that is already widely used in a lot of complex embedded systems like DVRs and cable boxes. That gave us several big advantages.

We were able to completely replace the troubled proprietary protocol with standard Internet protocols (TCP/IP), all included with Linux. Since these protocols drive the Internet and local area networks, they are obviously well documented, robust and proven, and there are excellent tools (such as Wireshark) to debug any problems during development.
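To give a feel for how little code a standard protocol demands, here is a minimal Python sketch that moves a block of bytes over TCP on the loopback interface. It is not the product's code: the function names are hypothetical, and a real system would add its own message framing and error handling on top. The point is that ordering, retransmission and integrity checking are all handled by the TCP/IP stack rather than by application code.

```python
import socket
import threading


def serve_once(server: socket.socket, data: bytes) -> None:
    """Accept one connection, send all the data, then close it."""
    conn, _addr = server.accept()
    with conn:
        conn.sendall(data)


def fetch_all(host: str, port: int) -> bytes:
    """Connect and read until the sender closes the connection."""
    chunks = []
    with socket.create_connection((host, port), timeout=5) as sock:
        while True:
            chunk = sock.recv(65536)
            if not chunk:
                break
            chunks.append(chunk)
    return b"".join(chunks)


def loopback_demo(data: bytes) -> bytes:
    """Send data through a real TCP connection on 127.0.0.1 and return it."""
    with socket.create_server(("127.0.0.1", 0)) as server:
        port = server.getsockname()[1]  # port 0 asks the OS for a free port
        sender = threading.Thread(
            target=serve_once, args=(server, data), daemon=True
        )
        sender.start()
        received = fetch_all("127.0.0.1", port)
        sender.join(timeout=5)
    return received
```

Calling `loopback_demo(b"test data")` should return the same bytes it was given, and the whole exchange can be watched in Wireshark on the loopback interface.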

A more modern User Interface

Another huge advantage of Linux is that it includes a web server. (This website runs on Linux.) My client’s old system was also burdened by a native Windows PC host application that nobody liked and that had a number of its own problems. We created a new user interface as a web application hosted on one of the Linux boards on a private network (not on the Internet). Since the application now runs in a browser, it is cross-platform and can be used on Windows, Mac, Linux and any other device that has a browser, and it can now be viewed by more than one person at a time. With the addition of an integrated WiFi hub, the user interface is also available on tablets and smartphones for quick control and monitoring during a test. In fact, an iPad was included with the new system to highlight this useful feature.

The new product is a big success. The communication problems are gone, the speeds are fast, and the user interface is a big improvement. And the new architecture can serve as a platform for adding new modules and creating new products from different combinations for years to come.

Lessons

This story illustrates the value of a few themes that I promote:

- Take the time to design and properly vet a system on paper before you dive into the final implementation. It will save you time and headaches in the long run.

- Use a highly qualified and experienced person to design the system, or at least to review the plans.

- Write performance specifications and test to confirm they are met. It was embarrassing that the high-speed USB port was almost uselessly slow and made it to market like that.

- If the system is complicated, take the time to gather or create the tools to analyze it and prove that it is working according to spec during development. This client should have created a tool like Wireshark for their custom protocol to analyze the data on the bus. They would have quickly identified many of the problems and fixed them before the product was shipped.

- Consider using existing industry standards. They are proven, robust and well supported. There are times when a proprietary scheme is appropriate. But designing your own encryption algorithm or file transfer protocol is probably a bad idea.
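The lesson about performance specifications can be turned into an automated check. Below is a minimal Python sketch, assuming a hypothetical `transfer` callable that moves a given number of bytes over the link under test. The names and the idea of passing in a clock are my own choices for the example, not something from the client's test suite.

```python
import time


class SpecNotMet(Exception):
    """Raised when a measured rate falls below the written requirement."""


def measure_bps(transfer, n_bytes: int, clock=time.perf_counter) -> float:
    """Time one call to transfer(n_bytes) and return bits per second."""
    start = clock()
    transfer(n_bytes)
    elapsed = clock() - start
    if elapsed <= 0:
        raise ValueError("elapsed time too small to measure")
    return n_bytes * 8 / elapsed


def require_at_least(measured_bps: float, required_bps: float) -> None:
    """Fail loudly if the measured rate is below the specification."""
    if measured_bps < required_bps:
        raise SpecNotMet(
            f"measured {measured_bps:.0f} bits per second, "
            f"spec requires {required_bps:.0f}"
        )
```

A check like this, run against real hardware before release, fails as soon as the measured rate drops below the written requirement. The `clock` parameter exists so the check itself can be tested with a fake clock instead of real delays.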